Currently RDF data is stored and sent in very verbose textual serialization formats that waste a lot of bandwidth and are expensive to parse and index. If RDF is meant to be machine understandable, why not use an appropriate format for that?

HDT (Header, Dictionary, Triples) is a compact data structure and binary serialization format for RDF that keeps big datasets compressed to save space while maintaining search and browse operations without prior decompression. This makes it an ideal format for storing and sharing RDF datasets on the Web.
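The core idea behind the Dictionary and Triples components can be illustrated with a toy sketch: each distinct RDF term is stored once and assigned an integer ID, and the triples are then stored as tuples of IDs instead of repeated long strings. The function names (`encode`, `decode`) and the sample data below are hypothetical, and the layout is deliberately simplified; the actual HDT encoding is more elaborate and is described in the technical specification.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]
IdTriple = Tuple[int, int, int]


def encode(triples: List[Triple]) -> Tuple[List[str], List[IdTriple]]:
    """Toy dictionary encoding (not the real HDT layout).

    Returns a sorted list of distinct terms (the "dictionary") and the
    triples rewritten as sorted tuples of 1-based term IDs.
    """
    terms = sorted({term for triple in triples for term in triple})
    term_to_id = {term: i + 1 for i, term in enumerate(terms)}
    id_triples = sorted(
        (term_to_id[s], term_to_id[p], term_to_id[o]) for s, p, o in triples
    )
    return terms, id_triples


def decode(terms: List[str], id_triples: List[IdTriple]) -> List[Triple]:
    """Map ID triples back to their original terms."""
    return [(terms[s - 1], terms[p - 1], terms[o - 1]) for s, p, o in id_triples]


# Hypothetical sample data: long terms repeat, IDs do not.
sample = [
    ("ex:alice", "foaf:knows", "ex:bob"),
    ("ex:alice", "foaf:name", '"Alice"'),
    ("ex:bob", "foaf:knows", "ex:alice"),
]
dictionary, encoded = encode(sample)
```

Because every term appears only once in the dictionary, the saving grows with the amount of repetition in the dataset, and the sorted ID triples can be searched directly without expanding them back into text.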

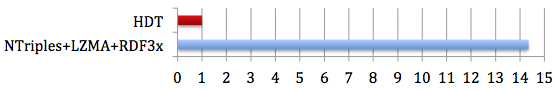

Comparison of HDT against traditional techniques regarding the time to download and start querying a dataset.

Some facts about HDT:

- The files are smaller than those of other RDF serialization formats. This means lower bandwidth costs for the provider, and also less time spent downloading for consumers.

- The HDT file is already indexed. Users of RDF dumps want to do something useful with the data. With HDT, they download the file and start browsing and querying in minutes, instead of wasting time on parsing and indexing tools that they never remember how to set up and tune.

- High-performance querying. The usual bottleneck of databases is slow disk access. The internal compression techniques of HDT allow most of the data (or even the whole dataset) to be kept in main memory, which is several orders of magnitude faster than disk.

- Highly concurrent. HDT is read-only, so it can dispatch many queries per second using multiple threads.

- The format is open and in the process of standardization (W3C HDT Member Submission). This ensures that anyone on the Web can generate and consume files, or even write their own implementation.

- The libraries are open source (LGPL). You can adapt the libraries to your needs, and the community can spot and fix issues.
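The "already indexed" and "highly concurrent" points above can be sketched with a toy example. It assumes triples are held as an immutable, sorted sequence of integer IDs, so a lookup is a binary search over the data as stored, and many threads can share the structure without locks because nothing ever writes to it. The names `ID_TRIPLES`, `triples_for_subject` and `query_many` are hypothetical and are not part of the HDT libraries; this is an illustration of the principle, not of HDT's actual index structures.

```python
from bisect import bisect_left
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

IdTriple = Tuple[int, int, int]

# Immutable (subject, predicate, object) ID triples, sorted by subject first.
# Hypothetical data standing in for an already-indexed, read-only file.
ID_TRIPLES: Tuple[IdTriple, ...] = (
    (1, 1, 2),
    (1, 2, 3),
    (2, 1, 1),
    (2, 1, 3),
    (3, 2, 1),
)


def triples_for_subject(subject_id: int) -> Tuple[IdTriple, ...]:
    """Return all triples with the given subject using two binary searches.

    A one-element tuple such as (2,) sorts before every triple whose
    subject is 2, so it marks the start of that subject's range.
    """
    start = bisect_left(ID_TRIPLES, (subject_id,))
    end = bisect_left(ID_TRIPLES, (subject_id + 1,))
    return ID_TRIPLES[start:end]


def query_many(subject_ids: Sequence[int], workers: int = 4) -> List[Tuple[IdTriple, ...]]:
    """Answer several lookups from a thread pool.

    No locking is needed because the shared data is never modified.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(triples_for_subject, subject_ids))


results = query_many([1, 2, 3])
```

In CPython the threads mainly demonstrate safe lock-free sharing rather than a parallel speed-up; the same read-only design is what lets a multi-threaded C++ or Java implementation serve queries in parallel.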

Some use cases where HDT stands out:

- Sharing RDF data on the Web. Your dataset is compact and ready to be used by the consumers.

- Data Analysis and Visualization. Tasks such as statistics extraction, data mining and clustering require exhaustive traversals over the data. In these cases it is much more convenient to have all the data locally than to rely on slow remote SPARQL endpoints.

- Setting up a mirror of an endpoint. Just download the HDT file and launch the HDT SPARQL Endpoint software.

- Smartphones and Embedded Devices. They are very restricted in terms of CPU and storage, so semantic applications on these devices need data formats that use resources efficiently.

- Federated Querying. Federated Query Engines can directly download big parts of frequently-accessed data sources and keep them locally cached. Thanks to HDT both the transmission and storage are cheap, and querying the cached block is very fast.

We provide the following tools:

- C++ and Java libraries / command line tools to create and search HDT files.

- HDT-it! GUI to create and browse HDT files from your desktop, including a 3D visualization of the adjacency matrix of the RDF graph.

- Jena Integration to have a Jena Model on top of a given HDT file. This allows using Jena ARQ to issue SPARQL queries and also makes it possible to set up a Fuseki SPARQL endpoint by specifying one or more HDT files as named graphs.

- Online Web Service to convert RDF files to HDT. Source available at hdt-online. Thanks to Michael Hausenblas for developing the tool.

To learn more about the internals of HDT, please read the technical specification document.